This is the landing page for the ECCV 2018 paper Interpolating Convolutional Neural Networks Using Batch Normalization.

Abstract

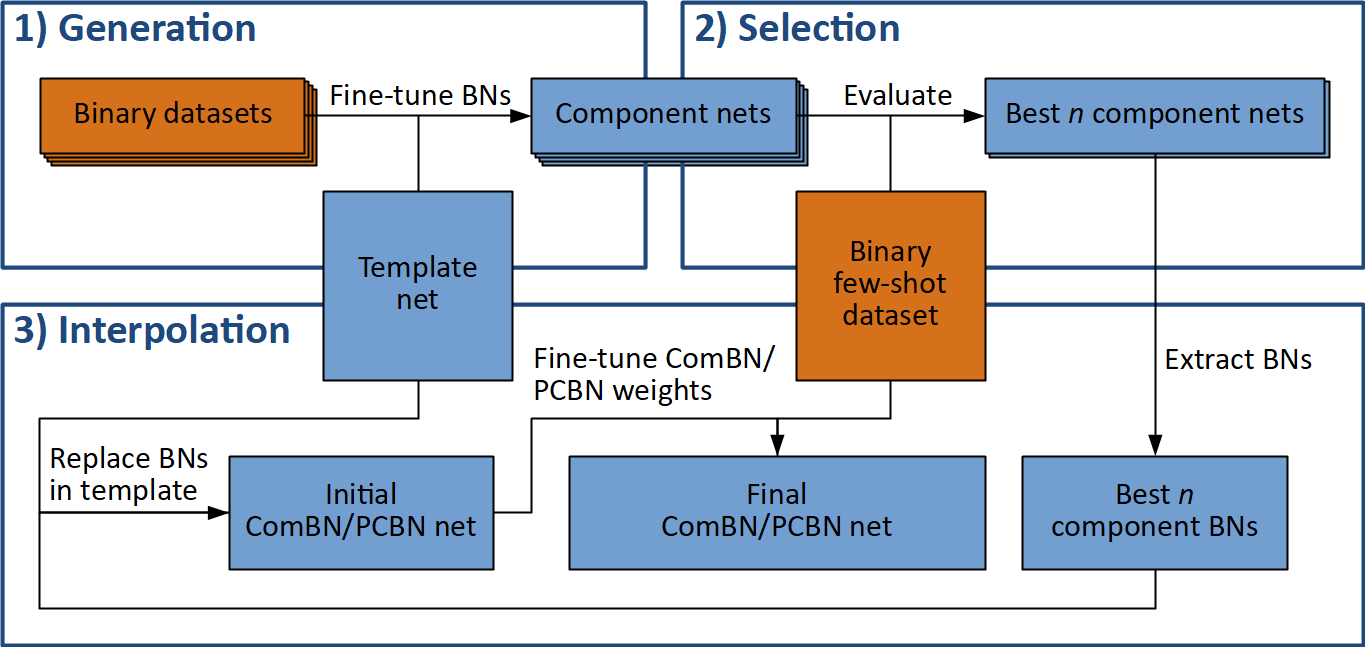

Perceiving a visual concept as a mixture of learned ones is natural for humans, aiding them to grasp new concepts and strengthening old ones. For all their power and recent success, deep convolutional networks do not have this ability. Inspired by recent work on universal representations for neural networks, we propose a simple emulation of this mechanism by repurposing batch normalization layers to discriminate visual classes, and formulating a way to combine them to solve new tasks. We show that this can be applied to 2-way few-shot learning, where we obtain between 4% and 17% better accuracy compared to straightforward full fine-tuning, and demonstrate that it can also be extended to the orthogonal application of style transfer.
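The abstract describes batch normalization layers that each discriminate a visual class, which are then combined to solve a new task. The following is a minimal sketch of one way such a combination could work. It assumes that each learned concept owns its own per-channel batch normalization scale (gamma) and shift (beta) while the convolutional weights are shared, and that combining concepts means taking a weighted average of those parameters. Both are illustrative assumptions. The names `BNParams`, `interpolate_bn` and `batch_norm` are hypothetical, and this is not the code from the authors' repository.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class BNParams:
    """Hypothetical container for one concept's per-channel BN affine parameters."""
    gamma: List[float]  # scale, one value per channel
    beta: List[float]   # shift, one value per channel


def interpolate_bn(params: Sequence[BNParams], weights: Sequence[float]) -> BNParams:
    """Combine several concepts' BN parameters as a convex (weighted-average) mixture.

    Assumption: the combination rule is a convex combination; weights are
    normalized to sum to 1 before mixing.
    """
    if not params or len(params) != len(weights):
        raise ValueError("need one weight per parameter set")
    if any(w < 0 for w in weights) or sum(weights) <= 0:
        raise ValueError("weights must be non-negative with a positive sum")
    total = sum(weights)
    norm = [w / total for w in weights]
    n_ch = len(params[0].gamma)
    gamma = [sum(w * p.gamma[c] for w, p in zip(norm, params)) for c in range(n_ch)]
    beta = [sum(w * p.beta[c] for w, p in zip(norm, params)) for c in range(n_ch)]
    return BNParams(gamma, beta)


def batch_norm(x: List[List[float]], p: BNParams, eps: float = 1e-5) -> List[List[float]]:
    """Training-mode batch normalization on a [batch][channel] input.

    Each channel is normalized with its batch mean and (biased) variance,
    then scaled by gamma and shifted by beta.
    """
    n, n_ch = len(x), len(x[0])
    out = [[0.0] * n_ch for _ in range(n)]
    for c in range(n_ch):
        col = [row[c] for row in x]
        mean = sum(col) / n
        var = sum((v - mean) ** 2 for v in col) / n
        for i in range(n):
            out[i][c] = p.gamma[c] * (x[i][c] - mean) / math.sqrt(var + eps) + p.beta[c]
    return out


if __name__ == "__main__":
    # Two hypothetical learned concepts, each with 2-channel BN parameters.
    cat = BNParams(gamma=[1.0, 2.0], beta=[0.0, 1.0])
    dog = BNParams(gamma=[3.0, 0.0], beta=[2.0, -1.0])
    mix = interpolate_bn([cat, dog], [0.5, 0.5])
    print(mix)  # BNParams(gamma=[2.0, 1.0], beta=[1.0, 0.0])
    # Apply the mixed parameters to a tiny batch of 2 samples x 2 channels.
    print(batch_norm([[1.0, 4.0], [3.0, 8.0]], mix))
```

Under this reading, only the small set of mixing weights would need to be chosen for a new task, while the shared convolutional weights stay fixed; how the paper actually learns or selects those weights is described in the paper itself, not here.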

Code

The code for reproducing results in the paper can be obtained from the GitHub repository.

Citation

BibTeX:

@inproceedings{Data2018,
  author = {Data, Gratianus Wesley Putra and Ngu, Kirjon and Murray, David William and Prisacariu, Victor Adrian},
  title = {Interpolating Convolutional Neural Networks Using Batch Normalization},
  booktitle = {European Conference on Computer Vision},
  year = {2018},
  month = {September}
}

Plain text:

G. Wesley P. Data, Kirjon Ngu, David W. Murray, Victor A. Prisacariu, “Interpolating Convolutional Neural Networks Using Batch Normalization,” in European Conference on Computer Vision, 2018